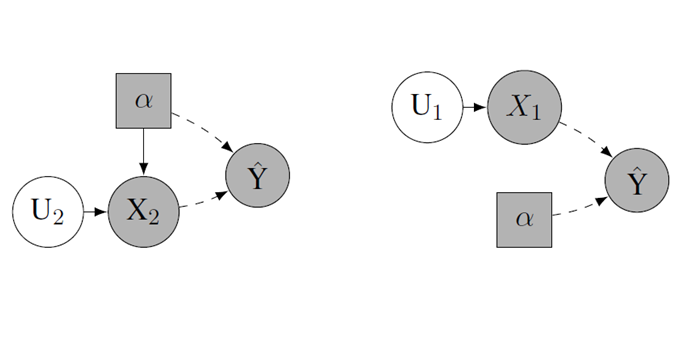

A crucial step towards the perspicuity of data-driven AI systems is to improve our understanding of why such a system acts the way it does. Counterfactual explications are a promising step in this direction. Yet they do not necessarily enable human stakeholders to act upon the resulting explanations, nor do they address the stakeholders’ contextual needs. In Project A6 we aim to move from counterfactual explications to contextualised actionable explications. We develop the mathematical foundations of actionable explications for machine learning algorithms: explications that uncover decision-relevant reasons that humans can feasibly act upon. In empirical studies, we test and improve our approach with respect to the usefulness of the resulting explanations from the perspective of different stakeholders.

A6 – Contextualised Actionable Explications