In 2019, CPEC embarked on a research mission to explore the foundations of perspicuous computing. At its root lay the observation that the explosion of opportunities for software-driven innovation comes with an implosion of human opportunities and capabilities to understand and control these innovations.

We identified the root cause of the problem: Contemporary IT- and AI-based systems, subsystems, and applications do not have any built-in concepts to explicate their behaviour. They generate and propagate outcomes of computations, but are not meant to provide explications, justifications, or plausibilisations of the outcomes. They are not perspicuous.

Since then, CPEC has been working on the formidable scientific challenge of seamless support for perspicuity. We have studied perspicuity across the entire system lifecycle, spanning design-time, run-time, and, in the case of a malfunction or an optimisation effort, inspection-time. We have laid the scientific foundations for designing, analysing, and interacting with perspicuous systems: computerised systems that explicate their functioning.

CPEC is organised into three project groups, each addressing a different facet of perspicuous computing research. Below, you can see the project groups as well as the individual projects themselves.

Analyse & Explicate – Project Group A

The project group Analyse & Explicate lays the foundations for analysis techniques as well as explication support across the entire system lifecycle, identifying and explicating causes and effects, thereby establishing the algorithmic principles of perspicuity for human stakeholders.

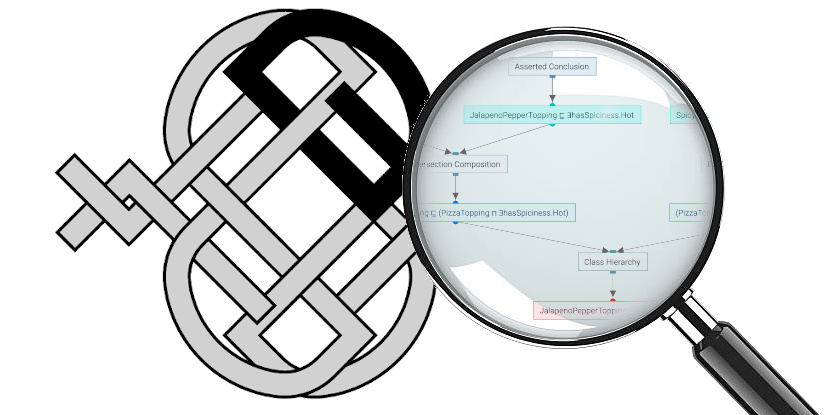

Classical verification techniques that aim at determining whether a system (model) satisfies a given specification (or not) are often unsatisfactory in practice. Perspicuous verification techniques…

Principal Investigators: Christel Baier, Rupak Majumdar, Joël Ouaknine

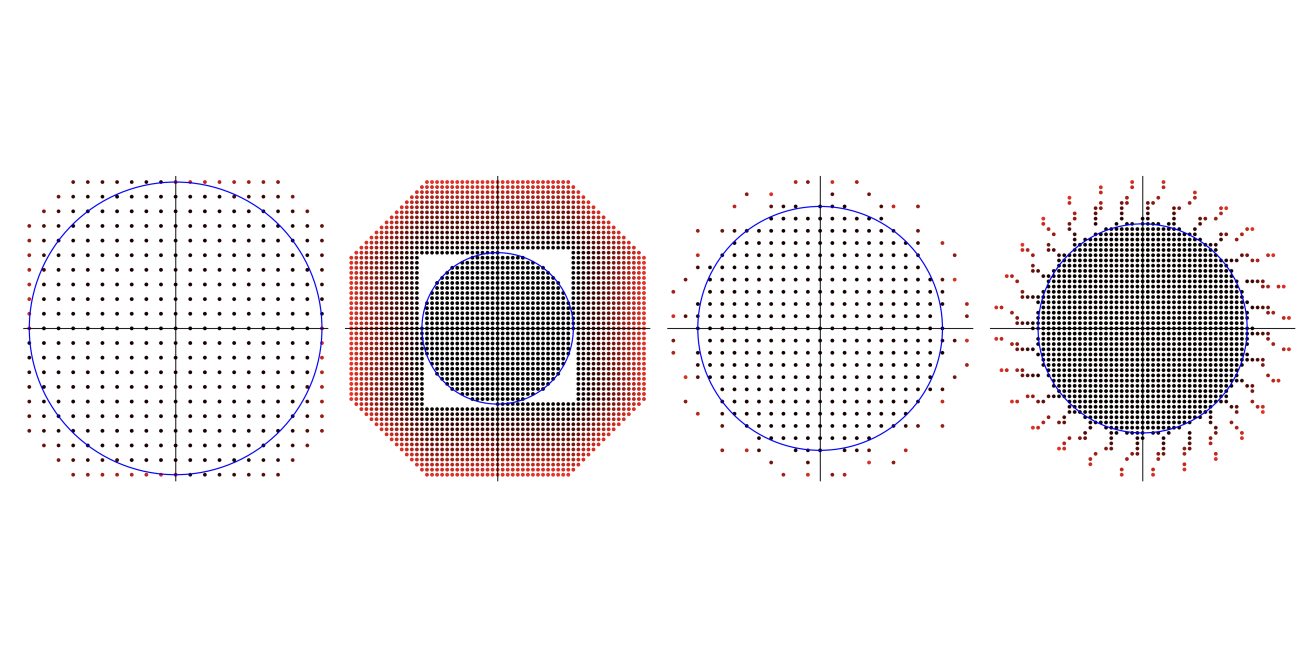

Knowledge representation based on Description Logics provides means to describe the current state of a cyber-physical system and its environment, as well as background information…

Principal Investigators: Franz Baader, Stefan Borgwardt, Markus Krötzsch, Antonio Krüger

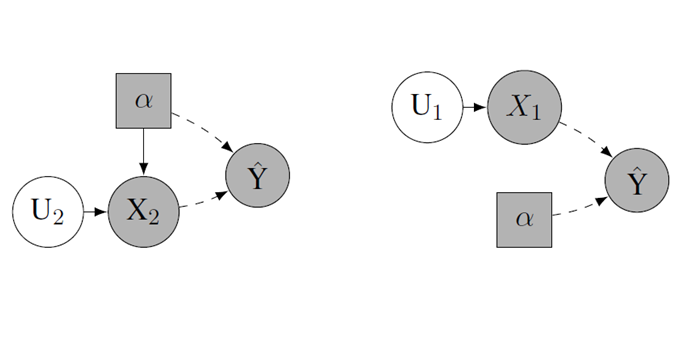

A crucial step towards perspicuity of data-driven AI systems is to improve our understanding of why such a system acts the way it does. In…

Principal Investigators: Markus Langer, Isabel Valera

Construct – Project Group C

The project group Construct lays the foundations for the construction of perspicuous computing systems, developing methodologies that support a high-level understanding of the relevant factors determining the system’s behaviour.

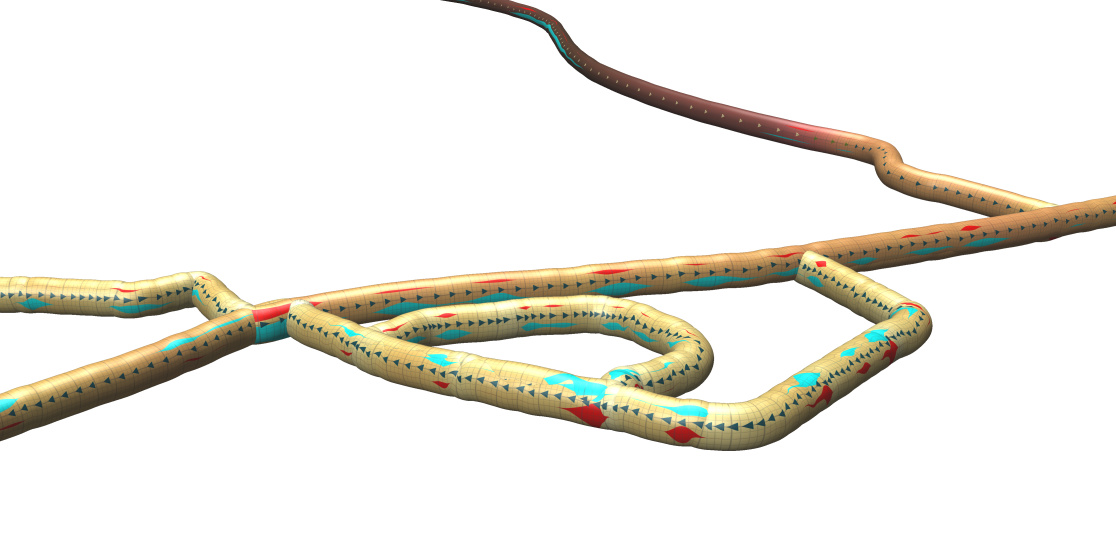

Project C1 provides programming abstractions enabling perspicuity at the implementation level for CPS applications acting on the physical world. We will integrate stream-based monitoring languages…

Principal Investigators: Bernd Finkbeiner, Stefan Gumhold, Rupak Majumdar

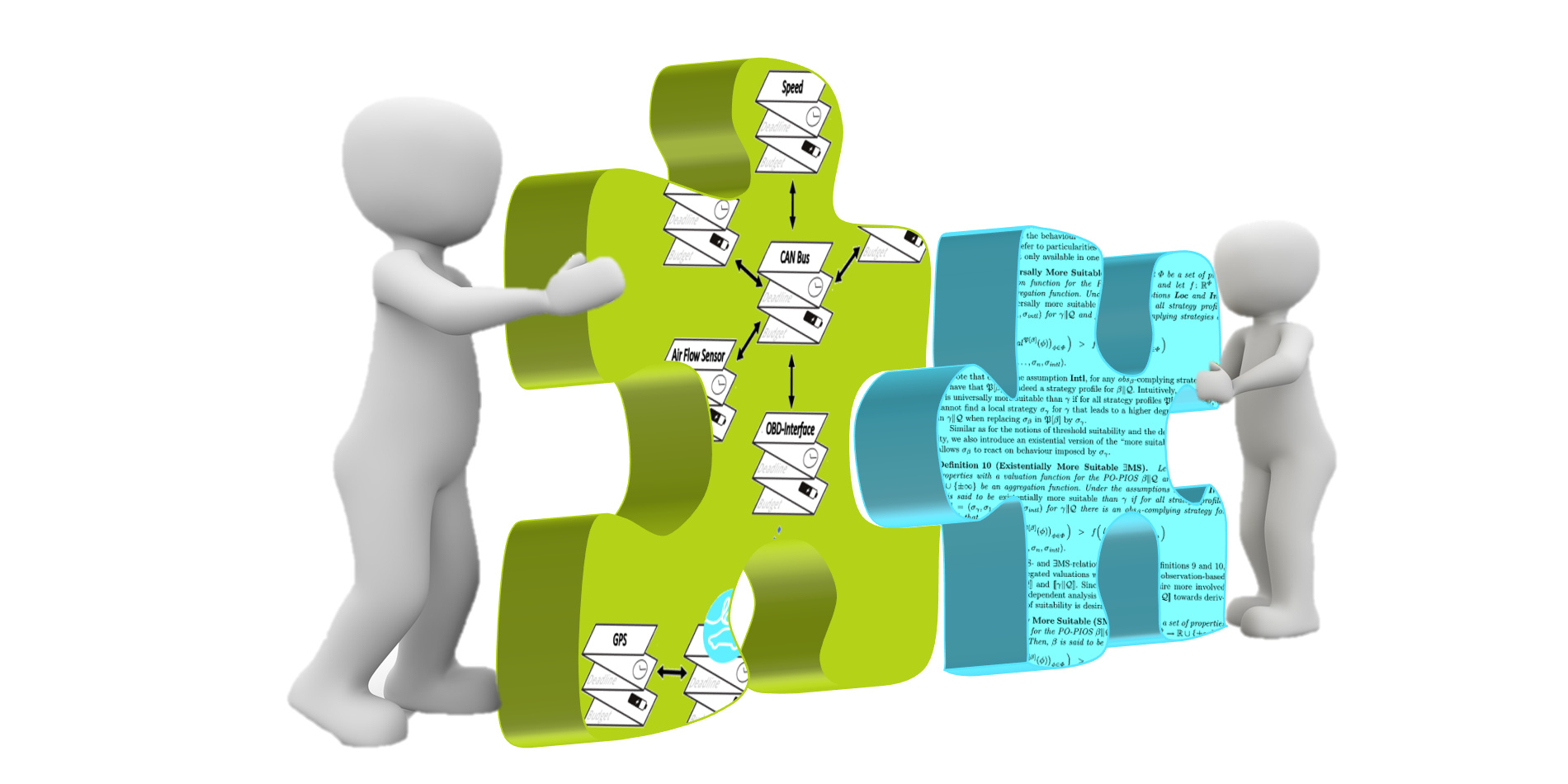

Project C2 harvests foundational advances on quantitative behavioural properties related to component suitability, putting in focus how formal and empirical approaches can be integrated towards…

Principal Investigators: Sven Apel, Christel Baier, Holger Hermanns

Future cyber-physical systems are expected to be adaptable in the field without sacrificing dependable operation. This project looks into ways to enable the rapid deployment…

Principal Investigators: Christof Fetzer, Holger Hermanns

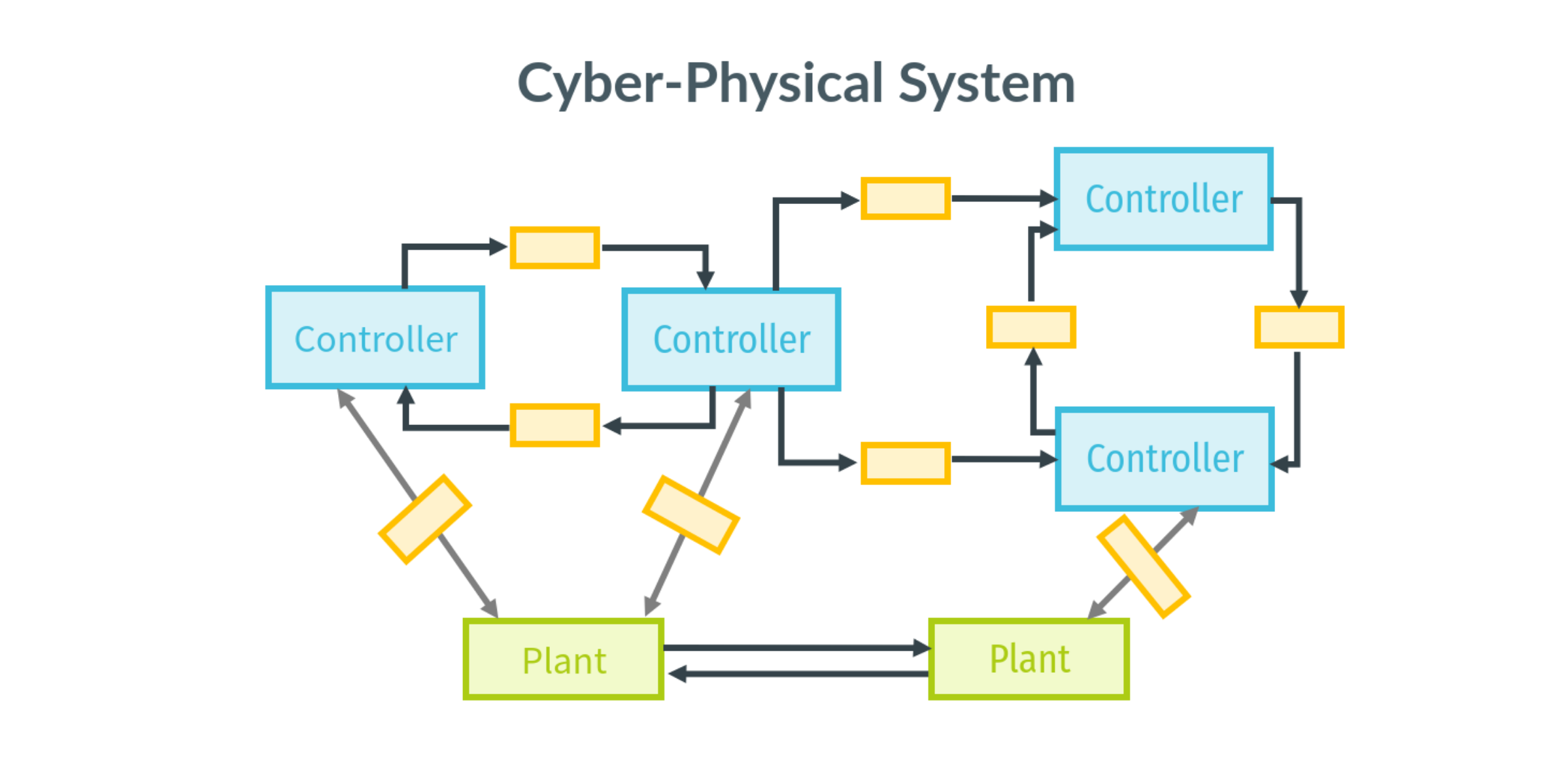

Understanding and explicating the knowledge and information flow between distributed physical and digital components plays a fundamental role in the perspicuous design of cyber-physical systems.…

Principal Investigators: Bernd Finkbeiner, Anne-Kathrin Schmuck

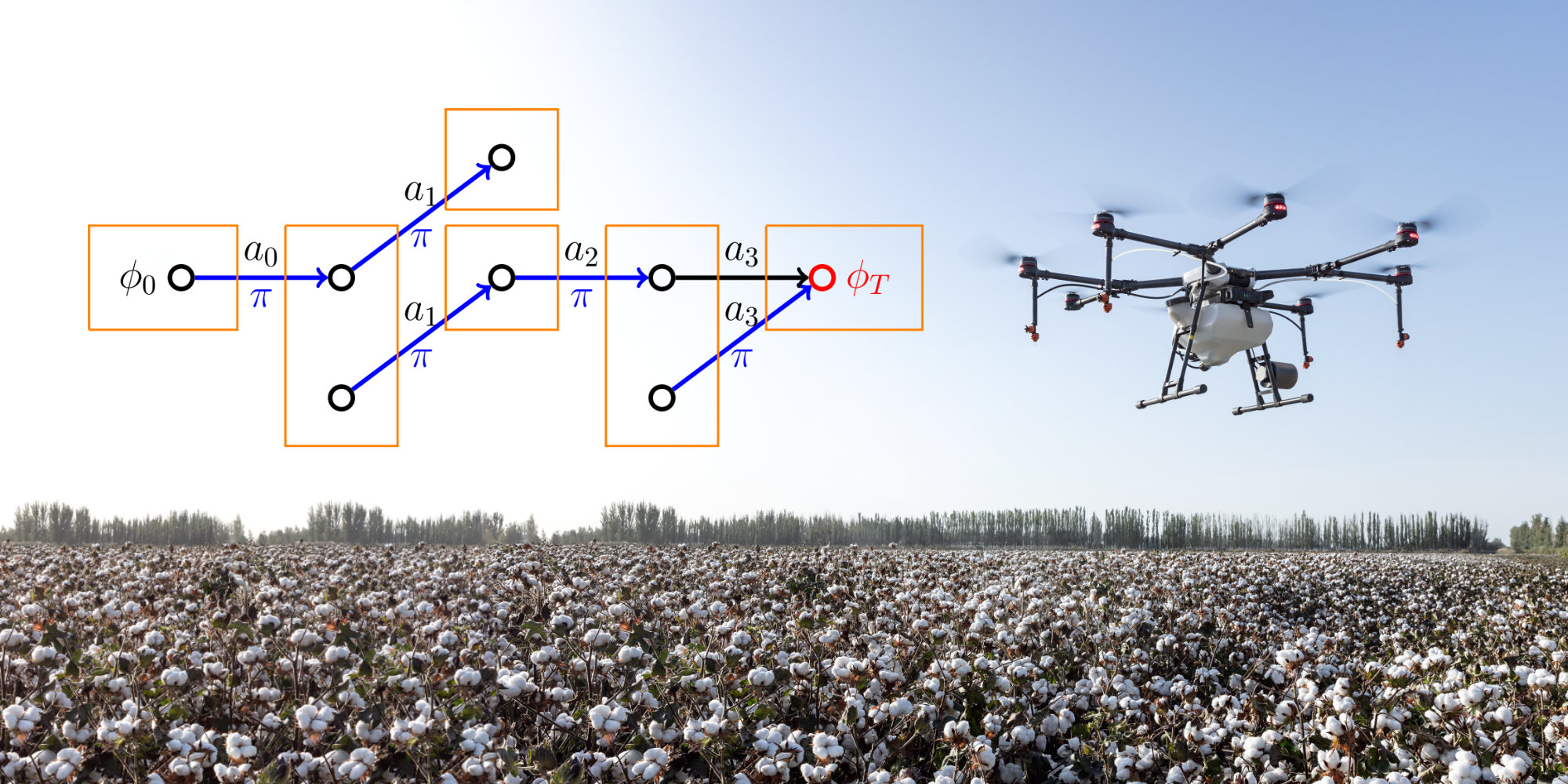

AI systems choosing actions in dynamic environments need to react to environment behaviour in real-time. Neural networks are rapidly gaining traction in tackling this, by…

Principal Investigators: Maria Christakis, Jörg Hoffmann, Isabel Valera

Explain & Interact – Project Group E

The project group Explain & Interact develops advances in human-computer interaction, visualisation, natural language processing, psychology, and legal research with respect to the seamless design, control, and inspection of cyber-physical systems. Its goal is to achieve perspicuity for diverse types of users in diverse system settings, enabling the fulfilment of societal desiderata and norms throughout the entire lifecycle of such systems.

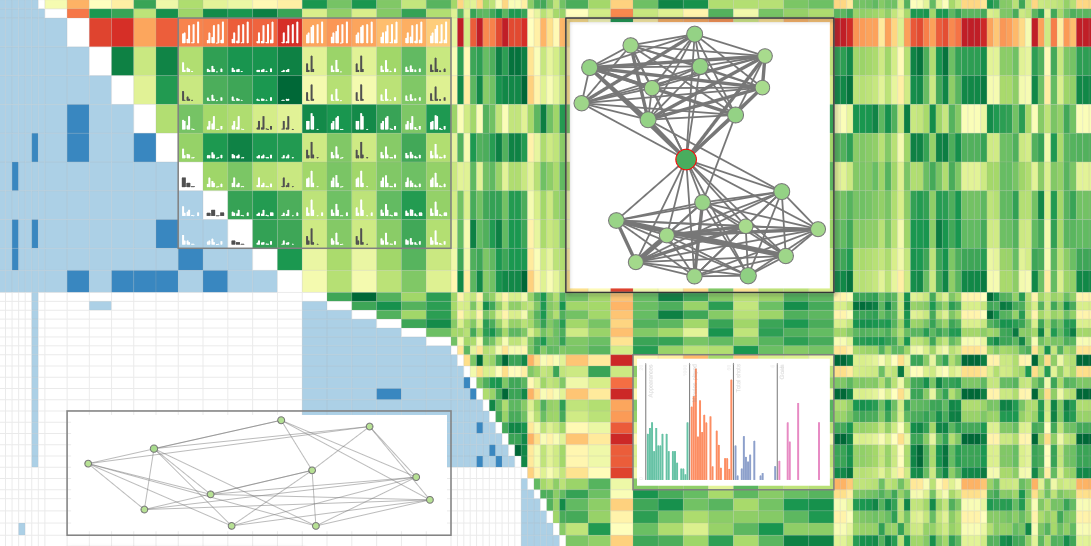

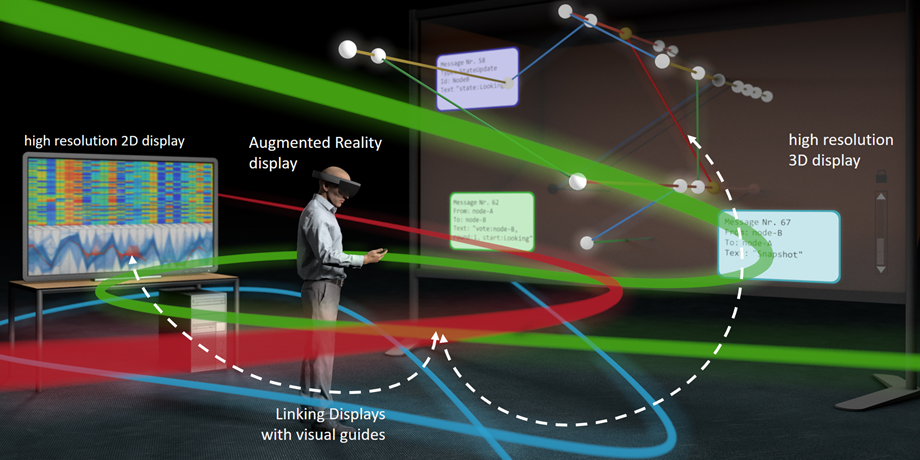

This project aims at developing novel visualisation concepts that facilitate human-centred explanation of formal explications of logic-based approaches. We will conduct visualisation research for formal…

Principal Investigators: Franz Baader, Christel Baier, Raimund Dachselt

Project E2 designs safe human-to-machine handover techniques in mixed-initiative control and addresses the recent trend towards human oversight in autonomous vehicles and aviation. If a…

Principal Investigators: Stefan Borgwardt, Vera Demberg, Antonio Krüger

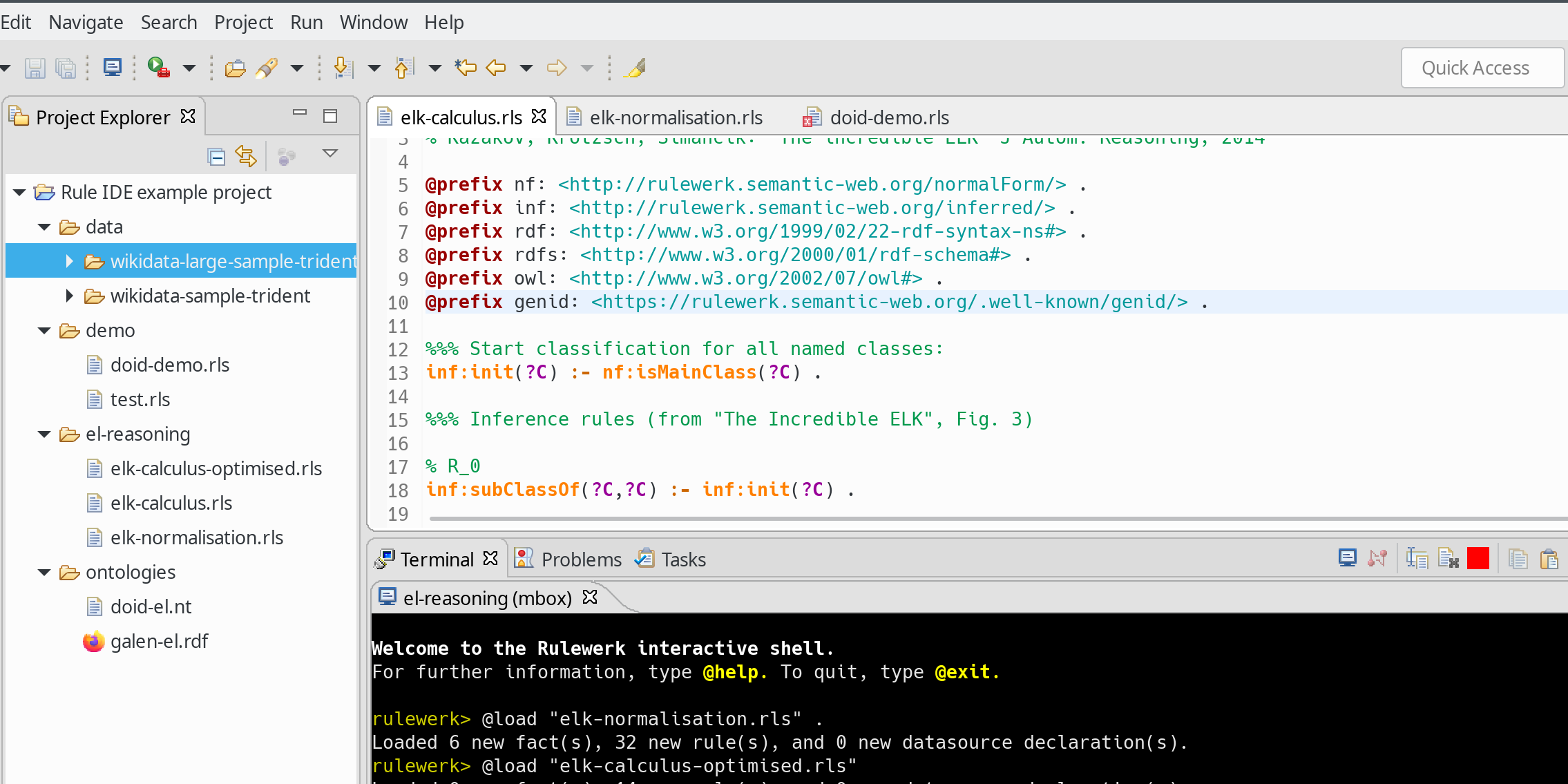

Project E3 studies computational formalisms and develops suitable visual systems for inspecting informational resources in efficient and understandable ways. Central to our approach are declarative…

Principal Investigators: Sven Apel, Raimund Dachselt, Markus Krötzsch

Project E4 focuses on CPS scenarios where spatio-temporal concepts like distance, orientation, velocity, and visibility play an important role, and where a large number of…

Principal Investigators: Raimund Dachselt, Stefan Gumhold, Jörg Hoffmann

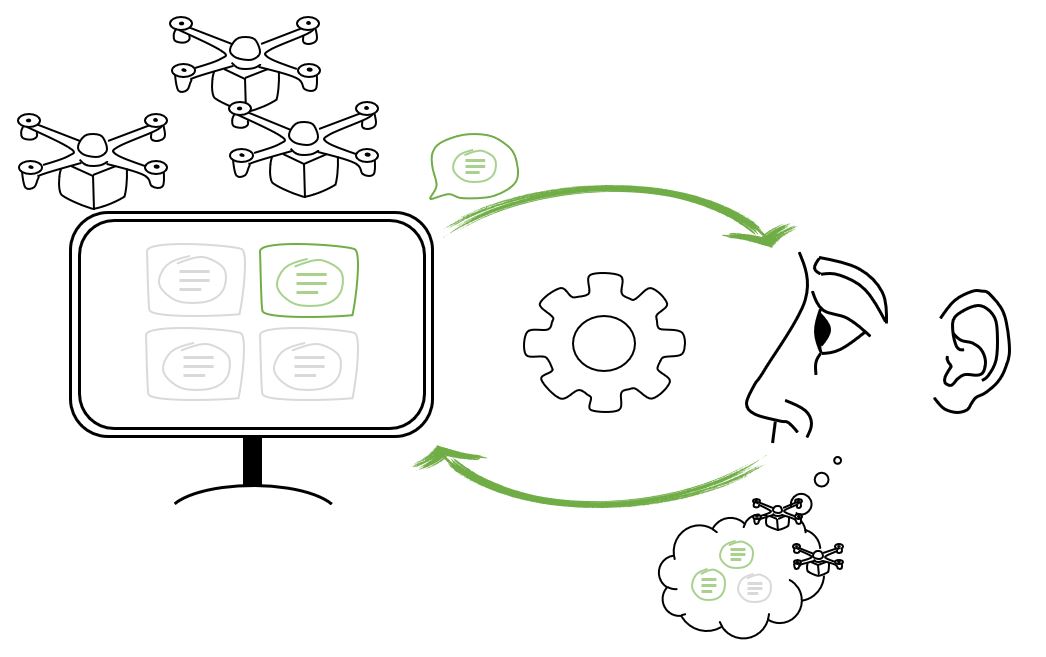

Project E6 focuses on how to effectively communicate information between automated systems and human users overseeing them, e.g., in drone control centres. It develops an…

Principal Investigators: Vera Demberg, Anna Maria Feit, Jörg Hoffmann

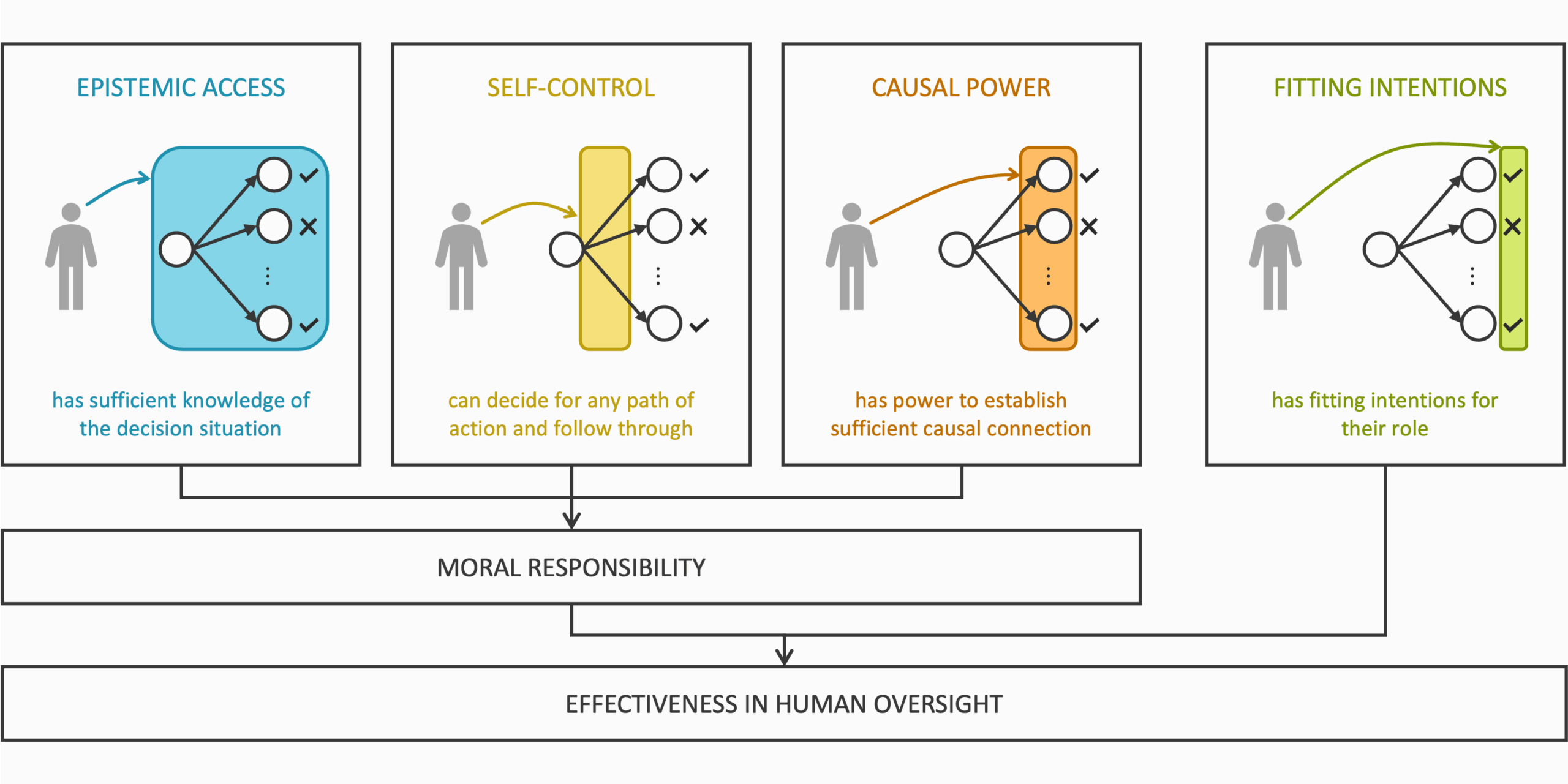

Project E7 augments the predominantly computational focus of TRR 248 by an interdisciplinary interface to societal desiderata. This will be achieved by a tight integration…

Principal Investigators: Holger Hermanns, Anne Lauber-Rönsberg

Completed Projects

The following projects were completed at the end of the first funding phase.

The vision of the project is to provide an algorithmic framework for the analysis of dynamical and hybrid automata models and corresponding explication mechanisms. While…

Principal Investigators: Christel Baier, Joël Ouaknine

The vision of the project is to develop a new theory for probabilistic causation in stochastic automata models with nondeterminism. It will yield the foundations…

Principal Investigators: Christel Baier, Bernd Finkbeiner, Rupak Majumdar

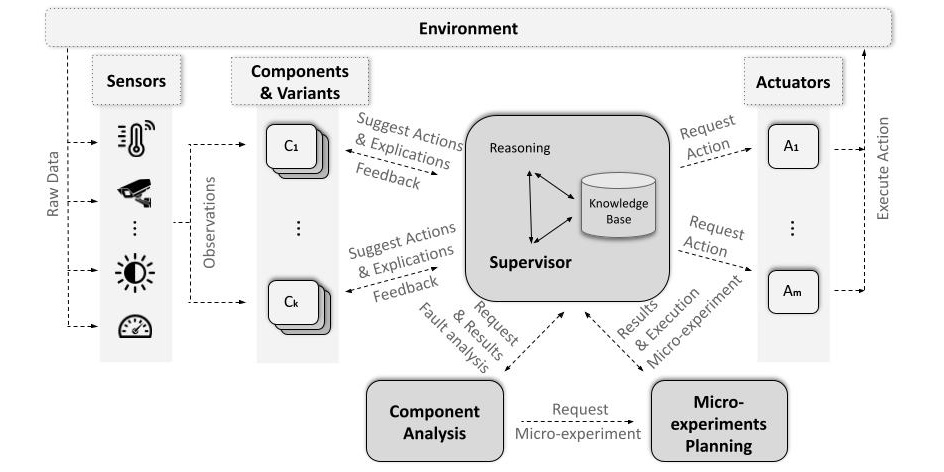

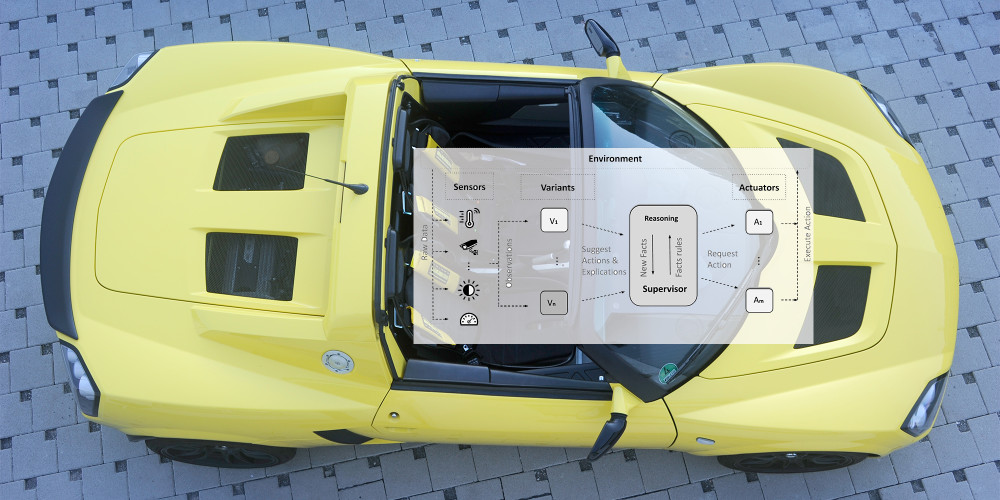

This project investigates perspicuous supervision of technical systems, carried out in a read data—analyse—react loop (RAR loop) several times per second. Our vision is a…

Principal Investigators: Christof Fetzer, Markus Krötzsch, Christoph Weidenbach

The goal of this project is an explication framework for machine learning techniques with a particular focus on neural networks. As current classifiers are extremely…

Principal Investigators: Stefan Gumhold, Matthias Hein

This project aims to improve the understandability of erroneous behaviour in cyber-physical systems using program analysis techniques. To this end, we will first establish the fundamental tools…

Principal Investigators: Maria Christakis, Christof Fetzer